mirror of

https://github.com/musix-org/musix-oss

synced 2026-02-02 15:24:28 +00:00

opus

37

node_modules/are-we-there-yet/CHANGES.md

generated

vendored

Normal file

@@ -0,0 +1,37 @@

Hi, figured we could actually use a changelog now:

## 1.1.5 2018-05-24

* [#92](https://github.com/iarna/are-we-there-yet/pull/92) Fix bug where
  `finish` would throw errors when including `TrackerStream` objects in
  `TrackerGroup` collections. (@brianloveswords)

## 1.1.4 2017-04-21

* Fix typo in package.json

## 1.1.3 2017-04-21

* Improve documentation and limit files included in the distribution.

## 1.1.2 2016-03-15

* Add tracker group cycle detection and tests for it

## 1.1.1 2016-01-29

* Fix a typo in stream completion tracker

## 1.1.0 2016-01-29

* Rewrote completion percent computation to be low impact: no more walking a
  tree of completion groups every time we need this info. Previously, with a
  medium-sized tree of completion groups, even a relatively modest number of
  calls to the top level `completed()` method would result in absurd numbers
  of calls overall as it walked down the tree. We now, instead, keep track as
  we bubble up changes, so the computation is limited to when data changes and
  to the depth of that one branch, instead of _every_ node. (Plus, we were already
  incurring _this_ cost, since we already bubbled out changes.)
* Moved different tracker types out to their own files.
* Made tests test for TOO MANY events too.
* Standardized the source code formatting

5

node_modules/are-we-there-yet/LICENSE

generated

vendored

Normal file

@@ -0,0 +1,5 @@

Copyright (c) 2015, Rebecca Turner

Permission to use, copy, modify, and/or distribute this software for any purpose with or without fee is hereby granted, provided that the above copyright notice and this permission notice appear in all copies.

THE SOFTWARE IS PROVIDED "AS IS" AND THE AUTHOR DISCLAIMS ALL WARRANTIES WITH REGARD TO THIS SOFTWARE INCLUDING ALL IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS. IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR ANY SPECIAL, DIRECT, INDIRECT, OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER IN AN ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.

195

node_modules/are-we-there-yet/README.md

generated

vendored

Normal file

@@ -0,0 +1,195 @@

are-we-there-yet
----------------

Track complex hierarchies of asynchronous task completion statuses. This is
intended to give you a way of recording and reporting the progress of the big
recursive fan-out and gather type workflows that are so common in async.

What you do with this completion data is up to you, but the most common use case is to
feed it to one of the many progress bar modules.

Most progress bar modules include a rudimentary version of this, but my
needs were more complex.

Usage
=====

```javascript
var fs = require("fs")
var TrackerGroup = require("are-we-there-yet").TrackerGroup

var top = new TrackerGroup("program")

var single = top.newItem("one thing", 100)
single.completeWork(20)

console.log(top.completed()) // 0.2

fs.stat("file", function(er, stat) {
  if (er) throw er
  var stream = top.newStream("file", stat.size)
  console.log(top.completed()) // now 0.1 as single is 50% of the job and is 20% complete
                              // and 50% * 20% == 10%
  fs.createReadStream("file").pipe(stream).on("data", function (chunk) {
    // do stuff with chunk
  })
  top.on("change", function (name) {
    // called each time a chunk is read from "file"
    // top.completed() will start at 0.1 and fill up to 0.6 as the file is read
  })
})
```

Shared Methods
==============

* var completed = tracker.completed()

  Implemented in: `Tracker`, `TrackerGroup`, `TrackerStream`

  Returns the ratio of completed work to work to be done. Range of 0 to 1.

* tracker.finish()

  Implemented in: `Tracker`, `TrackerGroup`

  Marks the tracker as completed. With a TrackerGroup this marks all of its
  components as finished, which in turn means that `tracker.completed()` for
  the group will now be 1.

  This will result in one or more `change` events being emitted.

Events
======

All tracker objects emit `change` events with the following arguments:

```
function (name, completed, tracker)
```

`name` is the name of the tracker that originally emitted the event,
or if it didn't have one, the first containing tracker group that had one.

`completed` is the completion ratio, from 0 to 1 (as returned by the `tracker.completed()` method).

`tracker` is the tracker object that you are listening for events on.
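A listener for the three-argument `change` event can be sketched as follows. This is only an illustration: a plain Node.js `EventEmitter` stands in for a real tracker, the event is emitted by hand, and the `seen` array and `source` parameter name are hypothetical.

```javascript
var EventEmitter = require("events").EventEmitter

// Hypothetical stand-in for a tracker: a plain EventEmitter driven by hand.
var tracker = new EventEmitter()
var seen = []

tracker.on("change", function (name, completed, source) {
  // Record what a progress bar would need in order to redraw itself.
  seen.push({
    name: name,
    percent: Math.round(completed * 100),
    same: source === tracker
  })
})

// Simulate the (name, completed, tracker) arguments described above.
tracker.emit("change", "file", 0.25, tracker)
console.log(seen[0].name, seen[0].percent) // file 25
```

With the real module, the same listener would be attached to a `Tracker`, `TrackerGroup`, or `TrackerStream` instead of the stand-in emitter.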

TrackerGroup
============

* var tracker = new TrackerGroup(**name**)

  * **name** *(optional)* - The name of this tracker group, used in change
    notifications if the component updating didn't have a name. Defaults to undefined.

  Creates a new empty tracker aggregation group. These are trackers whose
  completion status is determined by the completion status of other trackers.

* tracker.addUnit(**otherTracker**, **weight**)

  * **otherTracker** - Any of the other are-we-there-yet tracker objects
  * **weight** *(optional)* - The weight to give the tracker, defaults to 1.

  Adds the **otherTracker** to this aggregation group. The weight determines
  how long you expect this tracker to take to complete in proportion to other
  units. So for instance, if you add one tracker with a weight of 1 and
  another with a weight of 2, you're saying the second will take twice as long
  to complete as the first. As such, the first will account for 33% of the
  completion of this tracker and the second will account for the other 67%.

Returns **otherTracker**.
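The weighting arithmetic for `addUnit` can be sketched without the library. This is a minimal illustration, not the module's implementation: `weightedCompleted` and the `{ weight, completed }` unit objects are hypothetical names, and returning 0 for an empty group is an assumption.

```javascript
// Hypothetical helper, not part of are-we-there-yet: combine child
// completion ratios (0..1) according to their relative weights.
function weightedCompleted(units) {
  var totalWeight = 0
  var weightedSum = 0
  units.forEach(function (unit) {
    totalWeight += unit.weight
    weightedSum += unit.weight * unit.completed
  })
  // Assumption: an empty group reports 0 rather than dividing by zero.
  return totalWeight === 0 ? 0 : weightedSum / totalWeight
}

// The first tracker (weight 1) is done, the second (weight 2) has not started.
var ratio = weightedCompleted([
  { weight: 1, completed: 1 },
  { weight: 2, completed: 0 }
])
console.log(ratio.toFixed(2)) // 0.33
```

Finishing only the weight-2 unit instead would give roughly 0.67, matching the 33% / 67% split described above.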

* var subGroup = tracker.newGroup(**name**, **weight**)

  The above is exactly equivalent to:

  ```javascript
  var subGroup = tracker.addUnit(new TrackerGroup(name), weight)
  ```

* var subItem = tracker.newItem(**name**, **todo**, **weight**)

  The above is exactly equivalent to:

  ```javascript
  var subItem = tracker.addUnit(new Tracker(name, todo), weight)
  ```

* var subStream = tracker.newStream(**name**, **todo**, **weight**)

  The above is exactly equivalent to:

  ```javascript
  var subStream = tracker.addUnit(new TrackerStream(name, todo), weight)
  ```

* console.log( tracker.debug() )

  Returns a tree showing the completion of this tracker group and all of its
  children, including recursively entering all of the children.

Tracker
=======

* var tracker = new Tracker(**name**, **todo**)

  * **name** *(optional)* The name of this counter to report in change
    events. Defaults to undefined.
  * **todo** *(optional)* The amount of work todo (a number). Defaults to 0.

  Ordinarily these are constructed as a part of a tracker group (via
  `newItem`).

* var completed = tracker.completed()

  Returns the ratio of completed work to work to be done. Range of 0 to 1. If
  total work to be done is 0 then it will return 0.

* tracker.addWork(**todo**)

  * **todo** A number to add to the amount of work to be done.

  Increases the amount of work to be done, thus decreasing the completion
  percentage. Triggers a `change` event.

* tracker.completeWork(**completed**)

  * **completed** A number to add to the work complete

  Increase the amount of work complete, thus increasing the completion percentage.
  Will never increase the work completed past the amount of work todo. That is,
  percentages > 100% are not allowed. Triggers a `change` event.

* tracker.finish()

  Marks this tracker as finished, tracker.completed() will now be 1. Triggers
a `change` event.
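The counting rules described for `Tracker` (0 when there is no work, clamping at 100%, `addWork` lowering the ratio) can be sketched as a small standalone object. `SimpleTracker` and its `workTodo` / `workDone` fields are hypothetical; this sketch leaves out names and `change` events and is not the module's source.

```javascript
// Hypothetical stand-in for a Tracker; method names mirror the README,
// the internals are assumed for illustration.
function SimpleTracker(todo) {
  this.workTodo = todo || 0
  this.workDone = 0
}

SimpleTracker.prototype.completed = function () {
  // With no work registered, report 0 instead of dividing by zero.
  return this.workTodo === 0 ? 0 : this.workDone / this.workTodo
}

SimpleTracker.prototype.addWork = function (todo) {
  this.workTodo += todo
}

SimpleTracker.prototype.completeWork = function (done) {
  // Clamp so the ratio can never exceed 1.
  this.workDone = Math.min(this.workDone + done, this.workTodo)
}

var t = new SimpleTracker(100)
t.completeWork(20)
console.log(t.completed()) // 0.2
t.completeWork(500)
console.log(t.completed()) // 1
t.addWork(100)
console.log(t.completed()) // 0.5
```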

TrackerStream
=============

* var tracker = new TrackerStream(**name**, **size**, **options**)

  * **name** *(optional)* The name of this counter to report in change
    events. Defaults to undefined.
  * **size** *(optional)* The number of bytes being sent through this stream.
  * **options** *(optional)* A hash of stream options

  The tracker stream object is a pass through stream that updates an internal
  tracker object each time a block passes through. It's intended to track
  downloads, file extraction and other related activities. You use it by piping
  your data source into it and then using it as your data source.

  If your data has a length attribute then that's used as the amount of work
  completed when the chunk is passed through. If it does not (eg, object
  streams) then each chunk counts as completing 1 unit of work, so your size
should be the total number of objects being streamed.
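The per-chunk accounting rule above can be sketched as a small function. `workForChunk` is a hypothetical helper written for illustration; how the module treats edge cases such as zero-length chunks is not covered here.

```javascript
// Hypothetical helper: how many units of work a single chunk represents.
function workForChunk(chunk) {
  // Buffers and strings expose a numeric length; plain objects usually do not.
  var hasLength = chunk != null && typeof chunk.length === "number"
  return hasLength ? chunk.length : 1
}

console.log(workForChunk(Buffer.from("abcd"))) // 4
console.log(workForChunk({ id: 1 })) // 1
```

For a byte stream the expected size is therefore a byte count, while for an object stream it is the number of objects.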

* tracker.addWork(**todo**)

  * **todo** Increase the expected overall size by **todo** bytes.

  Increases the amount of work to be done, thus decreasing the completion
  percentage. Triggers a `change` event.

4

node_modules/are-we-there-yet/index.js

generated

vendored

Normal file

@@ -0,0 +1,4 @@

'use strict'
exports.TrackerGroup = require('./tracker-group.js')
exports.Tracker = require('./tracker.js')
exports.TrackerStream = require('./tracker-stream.js')

1

node_modules/are-we-there-yet/node_modules/isarray/.npmignore

generated

vendored

Normal file

@@ -0,0 +1 @@

node_modules

4

node_modules/are-we-there-yet/node_modules/isarray/.travis.yml

generated

vendored

Normal file

@@ -0,0 +1,4 @@

language: node_js
node_js:
  - "0.8"
  - "0.10"

6

node_modules/are-we-there-yet/node_modules/isarray/Makefile

generated

vendored

Normal file

@@ -0,0 +1,6 @@

test:
	@node_modules/.bin/tape test.js

.PHONY: test

60

node_modules/are-we-there-yet/node_modules/isarray/README.md

generated

vendored

Normal file

@@ -0,0 +1,60 @@

# isarray

`Array.isArray` for older browsers.

## Usage

```js
var isArray = require('isarray');

console.log(isArray([])); // => true
console.log(isArray({})); // => false
```

## Installation

With [npm](http://npmjs.org) do

```bash
$ npm install isarray
```

Then bundle for the browser with
[browserify](https://github.com/substack/browserify).

With [component](http://component.io) do

```bash
$ component install juliangruber/isarray
```

## License

(MIT)

Copyright (c) 2013 Julian Gruber <julian@juliangruber.com>

Permission is hereby granted, free of charge, to any person obtaining a copy of
this software and associated documentation files (the "Software"), to deal in
the Software without restriction, including without limitation the rights to
use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies
of the Software, and to permit persons to whom the Software is furnished to do
so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

19

node_modules/are-we-there-yet/node_modules/isarray/component.json

generated

vendored

Normal file

@@ -0,0 +1,19 @@

{
  "name" : "isarray",
  "description" : "Array#isArray for older browsers",
  "version" : "0.0.1",
  "repository" : "juliangruber/isarray",
  "homepage": "https://github.com/juliangruber/isarray",
  "main" : "index.js",
  "scripts" : [
    "index.js"
  ],
  "dependencies" : {},
  "keywords": ["browser","isarray","array"],
  "author": {
    "name": "Julian Gruber",
    "email": "mail@juliangruber.com",
    "url": "http://juliangruber.com"
  },
  "license": "MIT"
}

5

node_modules/are-we-there-yet/node_modules/isarray/index.js

generated

vendored

Normal file

@@ -0,0 +1,5 @@

var toString = {}.toString;

module.exports = Array.isArray || function (arr) {
  return toString.call(arr) == '[object Array]';
};

73

node_modules/are-we-there-yet/node_modules/isarray/package.json

generated

vendored

Normal file

@@ -0,0 +1,73 @@

|

||||

{

|

||||

"_from": "isarray@~1.0.0",

|

||||

"_id": "isarray@1.0.0",

|

||||

"_inBundle": false,

|

||||

"_integrity": "sha1-u5NdSFgsuhaMBoNJV6VKPgcSTxE=",

|

||||

"_location": "/are-we-there-yet/isarray",

|

||||

"_phantomChildren": {},

|

||||

"_requested": {

|

||||

"type": "range",

|

||||

"registry": true,

|

||||

"raw": "isarray@~1.0.0",

|

||||

"name": "isarray",

|

||||

"escapedName": "isarray",

|

||||

"rawSpec": "~1.0.0",

|

||||

"saveSpec": null,

|

||||

"fetchSpec": "~1.0.0"

|

||||

},

|

||||

"_requiredBy": [

|

||||

"/are-we-there-yet/readable-stream"

|

||||

],

|

||||

"_resolved": "https://registry.npmjs.org/isarray/-/isarray-1.0.0.tgz",

|

||||

"_shasum": "bb935d48582cba168c06834957a54a3e07124f11",

|

||||

"_spec": "isarray@~1.0.0",

|

||||

"_where": "C:\\Users\\matia\\Documents\\GitHub\\Musix-V3\\node_modules\\are-we-there-yet\\node_modules\\readable-stream",

|

||||

"author": {

|

||||

"name": "Julian Gruber",

|

||||

"email": "mail@juliangruber.com",

|

||||

"url": "http://juliangruber.com"

|

||||

},

|

||||

"bugs": {

|

||||

"url": "https://github.com/juliangruber/isarray/issues"

|

||||

},

|

||||

"bundleDependencies": false,

|

||||

"dependencies": {},

|

||||

"deprecated": false,

|

||||

"description": "Array#isArray for older browsers",

|

||||

"devDependencies": {

|

||||

"tape": "~2.13.4"

|

||||

},

|

||||

"homepage": "https://github.com/juliangruber/isarray",

|

||||

"keywords": [

|

||||

"browser",

|

||||

"isarray",

|

||||

"array"

|

||||

],

|

||||

"license": "MIT",

|

||||

"main": "index.js",

|

||||

"name": "isarray",

|

||||

"repository": {

|

||||

"type": "git",

|

||||

"url": "git://github.com/juliangruber/isarray.git"

|

||||

},

|

||||

"scripts": {

|

||||

"test": "tape test.js"

|

||||

},

|

||||

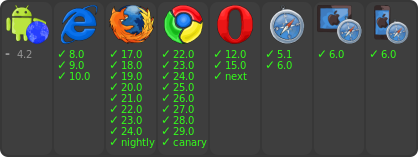

"testling": {

|

||||

"files": "test.js",

|

||||

"browsers": [

|

||||

"ie/8..latest",

|

||||

"firefox/17..latest",

|

||||

"firefox/nightly",

|

||||

"chrome/22..latest",

|

||||

"chrome/canary",

|

||||

"opera/12..latest",

|

||||

"opera/next",

|

||||

"safari/5.1..latest",

|

||||

"ipad/6.0..latest",

|

||||

"iphone/6.0..latest",

|

||||

"android-browser/4.2..latest"

|

||||

]

|

||||

},

|

||||

"version": "1.0.0"

|

||||

}

|

||||

20

node_modules/are-we-there-yet/node_modules/isarray/test.js

generated

vendored

Normal file

@@ -0,0 +1,20 @@

var isArray = require('./');
var test = require('tape');

test('is array', function(t){
  t.ok(isArray([]));
  t.notOk(isArray({}));
  t.notOk(isArray(null));
  t.notOk(isArray(false));

  var obj = {};
  obj[0] = true;
  t.notOk(isArray(obj));

  var arr = [];
  arr.foo = 'bar';
  t.ok(isArray(arr));

  t.end();
});

34

node_modules/are-we-there-yet/node_modules/readable-stream/.travis.yml

generated

vendored

Normal file

@@ -0,0 +1,34 @@

sudo: false
language: node_js
before_install:
  - (test $NPM_LEGACY && npm install -g npm@2 && npm install -g npm@3) || true
notifications:
  email: false
matrix:
  fast_finish: true
  include:
  - node_js: '0.8'
    env: NPM_LEGACY=true
  - node_js: '0.10'
    env: NPM_LEGACY=true
  - node_js: '0.11'
    env: NPM_LEGACY=true
  - node_js: '0.12'
    env: NPM_LEGACY=true
  - node_js: 1
    env: NPM_LEGACY=true
  - node_js: 2
    env: NPM_LEGACY=true
  - node_js: 3
    env: NPM_LEGACY=true
  - node_js: 4
  - node_js: 5
  - node_js: 6
  - node_js: 7
  - node_js: 8
  - node_js: 9
script: "npm run test"
env:
  global:
  - secure: rE2Vvo7vnjabYNULNyLFxOyt98BoJexDqsiOnfiD6kLYYsiQGfr/sbZkPMOFm9qfQG7pjqx+zZWZjGSswhTt+626C0t/njXqug7Yps4c3dFblzGfreQHp7wNX5TFsvrxd6dAowVasMp61sJcRnB2w8cUzoe3RAYUDHyiHktwqMc=
  - secure: g9YINaKAdMatsJ28G9jCGbSaguXCyxSTy+pBO6Ch0Cf57ZLOTka3HqDj8p3nV28LUIHZ3ut5WO43CeYKwt4AUtLpBS3a0dndHdY6D83uY6b2qh5hXlrcbeQTq2cvw2y95F7hm4D1kwrgZ7ViqaKggRcEupAL69YbJnxeUDKWEdI=

38

node_modules/are-we-there-yet/node_modules/readable-stream/CONTRIBUTING.md

generated

vendored

Normal file

@@ -0,0 +1,38 @@

# Developer's Certificate of Origin 1.1

By making a contribution to this project, I certify that:

* (a) The contribution was created in whole or in part by me and I
  have the right to submit it under the open source license
  indicated in the file; or

* (b) The contribution is based upon previous work that, to the best
  of my knowledge, is covered under an appropriate open source
  license and I have the right under that license to submit that
  work with modifications, whether created in whole or in part
  by me, under the same open source license (unless I am
  permitted to submit under a different license), as indicated
  in the file; or

* (c) The contribution was provided directly to me by some other
  person who certified (a), (b) or (c) and I have not modified
  it.

* (d) I understand and agree that this project and the contribution
  are public and that a record of the contribution (including all
  personal information I submit with it, including my sign-off) is
  maintained indefinitely and may be redistributed consistent with
  this project or the open source license(s) involved.

## Moderation Policy

The [Node.js Moderation Policy] applies to this WG.

## Code of Conduct

The [Node.js Code of Conduct][] applies to this WG.

[Node.js Code of Conduct]:
https://github.com/nodejs/node/blob/master/CODE_OF_CONDUCT.md
[Node.js Moderation Policy]:
https://github.com/nodejs/TSC/blob/master/Moderation-Policy.md

136

node_modules/are-we-there-yet/node_modules/readable-stream/GOVERNANCE.md

generated

vendored

Normal file

@@ -0,0 +1,136 @@

### Streams Working Group

The Node.js Streams project is jointly governed by a Working Group (WG)
that is responsible for high-level guidance of the project.

The WG has final authority over this project including:

* Technical direction
* Project governance and process (including this policy)
* Contribution policy
* GitHub repository hosting
* Conduct guidelines
* Maintaining the list of additional Collaborators

For the current list of WG members, see the project
[README.md](./README.md#current-project-team-members).

### Collaborators

The readable-stream GitHub repository is
maintained by the WG and additional Collaborators who are added by the
WG on an ongoing basis.

Individuals making significant and valuable contributions are made
Collaborators and given commit-access to the project. These
individuals are identified by the WG and their addition as
Collaborators is discussed during the WG meeting.

_Note:_ If you make a significant contribution and are not considered
for commit-access, log an issue or contact a WG member directly and it
will be brought up in the next WG meeting.

Modifications of the contents of the readable-stream repository are
made on a collaborative basis. Anybody with a GitHub account may propose a
modification via pull request and it will be considered by the project
Collaborators. All pull requests must be reviewed and accepted by a
Collaborator with sufficient expertise who is able to take full
responsibility for the change. In the case of pull requests proposed
by an existing Collaborator, an additional Collaborator is required
for sign-off. Consensus should be sought if additional Collaborators
participate and there is disagreement around a particular
modification. See _Consensus Seeking Process_ below for further detail
on the consensus model used for governance.

Collaborators may opt to elevate significant or controversial
modifications, or modifications that have not found consensus to the
WG for discussion by assigning the ***WG-agenda*** tag to a pull
request or issue. The WG should serve as the final arbiter where
required.

For the current list of Collaborators, see the project
[README.md](./README.md#members).

### WG Membership

WG seats are not time-limited. There is no fixed size of the WG.
However, the expected target is between 6 and 12, to ensure adequate
coverage of important areas of expertise, balanced with the ability to
make decisions efficiently.

There is no specific set of requirements or qualifications for WG
membership beyond these rules.

The WG may add additional members to the WG by unanimous consensus.

A WG member may be removed from the WG by voluntary resignation, or by
unanimous consensus of all other WG members.

Changes to WG membership should be posted in the agenda, and may be
suggested as any other agenda item (see "WG Meetings" below).

If an addition or removal is proposed during a meeting, and the full
WG is not in attendance to participate, then the addition or removal
is added to the agenda for the subsequent meeting. This is to ensure
that all members are given the opportunity to participate in all
membership decisions. If a WG member is unable to attend a meeting
where a planned membership decision is being made, then their consent
is assumed.

No more than 1/3 of the WG members may be affiliated with the same
employer. If removal or resignation of a WG member, or a change of
employment by a WG member, creates a situation where more than 1/3 of
the WG membership shares an employer, then the situation must be
immediately remedied by the resignation or removal of one or more WG
members affiliated with the over-represented employer(s).

### WG Meetings

The WG meets occasionally on a Google Hangout On Air. A designated moderator
approved by the WG runs the meeting. Each meeting should be
published to YouTube.

Items are added to the WG agenda that are considered contentious or
are modifications of governance, contribution policy, WG membership,
or release process.

The intention of the agenda is not to approve or review all patches;
that should happen continuously on GitHub and be handled by the larger
group of Collaborators.

Any community member or contributor can ask that something be added to
the next meeting's agenda by logging a GitHub Issue. Any Collaborator,
WG member or the moderator can add the item to the agenda by adding
the ***WG-agenda*** tag to the issue.

Prior to each WG meeting the moderator will share the Agenda with
members of the WG. WG members can add any items they like to the
agenda at the beginning of each meeting. The moderator and the WG
cannot veto or remove items.

The WG may invite persons or representatives from certain projects to
participate in a non-voting capacity.

The moderator is responsible for summarizing the discussion of each
agenda item and sending it as a pull request after the meeting.

### Consensus Seeking Process

The WG follows a
[Consensus Seeking](http://en.wikipedia.org/wiki/Consensus-seeking_decision-making)
decision-making model.

When an agenda item has appeared to reach a consensus the moderator
will ask "Does anyone object?" as a final call for dissent from the
consensus.

If an agenda item cannot reach a consensus a WG member can call for
either a closing vote or a vote to table the issue to the next
meeting. The call for a vote must be seconded by a majority of the WG
or else the discussion will continue. Simple majority wins.

Note that changes to WG membership require a majority consensus. See
"WG Membership" above.

47

node_modules/are-we-there-yet/node_modules/readable-stream/LICENSE

generated

vendored

Normal file

@@ -0,0 +1,47 @@

Node.js is licensed for use as follows:

"""
Copyright Node.js contributors. All rights reserved.

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to
deal in the Software without restriction, including without limitation the
rights to use, copy, modify, merge, publish, distribute, sublicense, and/or
sell copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in
all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING
FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS
IN THE SOFTWARE.
"""

This license applies to parts of Node.js originating from the
https://github.com/joyent/node repository:

"""
Copyright Joyent, Inc. and other Node contributors. All rights reserved.
Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to
deal in the Software without restriction, including without limitation the
rights to use, copy, modify, merge, publish, distribute, sublicense, and/or
sell copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in
all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING
FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS
IN THE SOFTWARE.
"""

58

node_modules/are-we-there-yet/node_modules/readable-stream/README.md

generated

vendored

Normal file

@@ -0,0 +1,58 @@

# readable-stream

***Node-core v8.11.1 streams for userland***

```bash
npm install --save readable-stream
```

***Node-core streams for userland***

This package is a mirror of the Streams2 and Streams3 implementations in
Node-core.

Full documentation may be found on the [Node.js website](https://nodejs.org/dist/v8.11.1/docs/api/stream.html).

If you want to guarantee a stable streams base, regardless of what version of
Node you or the users of your libraries are using, use **readable-stream** *only* and avoid the *"stream"* module in Node-core. For background, see [this blogpost](http://r.va.gg/2014/06/why-i-dont-use-nodes-core-stream-module.html).


As of version 2.0.0 **readable-stream** uses semantic versioning.
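
As a minimal sketch of the advice above, the snippet below loads stream classes from one place and defines a small transform on top of them. The `loadStreams` helper and the `UpperCase` class are hypothetical names used only for illustration, and the fallback to the core `stream` module is an assumption made so the sketch runs where **readable-stream** is not installed; a library following this README strictly would drop the fallback.

```javascript
'use strict';

// Hypothetical helper: prefer the userland package. The fallback to core
// `stream` exists only so this sketch runs without installing anything.
function loadStreams() {
  try {
    return require('readable-stream');
  } catch (err) {
    return require('stream');
  }
}

const { Transform } = loadStreams();

// Illustrative transform that upper-cases every chunk written to it.
class UpperCase extends Transform {
  _transform(chunk, encoding, cb) {
    // Chunks arrive as Buffers by default; pass the result to the readable side.
    cb(null, chunk.toString().toUpperCase());
  }
}

const upper = new UpperCase();
upper.write('hello');

// The transform above runs synchronously, so the output is already buffered.
const received = upper.read().toString();
console.log(received);
```

Keeping the `require` in a single helper means the rest of a codebase never names the core module directly, which is the point of the recommendation.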

# Streams Working Group

`readable-stream` is maintained by the Streams Working Group, which
oversees the development and maintenance of the Streams API within
Node.js. The responsibilities of the Streams Working Group include:

* Addressing stream issues on the Node.js issue tracker.
* Authoring and editing stream documentation within the Node.js project.
* Reviewing changes to stream subclasses within the Node.js project.
* Redirecting changes to streams from the Node.js project to this
  project.
* Assisting in the implementation of stream providers within Node.js.
* Recommending versions of `readable-stream` to be included in Node.js.
* Messaging about the future of streams to give the community advance
  notice of changes.

<a name="members"></a>
## Team Members

* **Chris Dickinson** ([@chrisdickinson](https://github.com/chrisdickinson)) <christopher.s.dickinson@gmail.com>
  - Release GPG key: 9554F04D7259F04124DE6B476D5A82AC7E37093B
* **Calvin Metcalf** ([@calvinmetcalf](https://github.com/calvinmetcalf)) <calvin.metcalf@gmail.com>
  - Release GPG key: F3EF5F62A87FC27A22E643F714CE4FF5015AA242
* **Rod Vagg** ([@rvagg](https://github.com/rvagg)) <rod@vagg.org>
  - Release GPG key: DD8F2338BAE7501E3DD5AC78C273792F7D83545D
* **Sam Newman** ([@sonewman](https://github.com/sonewman)) <newmansam@outlook.com>
* **Mathias Buus** ([@mafintosh](https://github.com/mafintosh)) <mathiasbuus@gmail.com>
* **Domenic Denicola** ([@domenic](https://github.com/domenic)) <d@domenic.me>
* **Matteo Collina** ([@mcollina](https://github.com/mcollina)) <matteo.collina@gmail.com>
  - Release GPG key: 3ABC01543F22DD2239285CDD818674489FBC127E
* **Irina Shestak** ([@lrlna](https://github.com/lrlna)) <shestak.irina@gmail.com>

60

node_modules/are-we-there-yet/node_modules/readable-stream/doc/wg-meetings/2015-01-30.md

generated

vendored

Normal file

@@ -0,0 +1,60 @@

# streams WG Meeting 2015-01-30

## Links

* **Google Hangouts Video**: http://www.youtube.com/watch?v=I9nDOSGfwZg
* **GitHub Issue**: https://github.com/iojs/readable-stream/issues/106
* **Original Minutes Google Doc**: https://docs.google.com/document/d/17aTgLnjMXIrfjgNaTUnHQO7m3xgzHR2VXBTmi03Qii4/

## Agenda

Extracted from https://github.com/iojs/readable-stream/labels/wg-agenda prior to meeting.

* adopt a charter [#105](https://github.com/iojs/readable-stream/issues/105)
* release and versioning strategy [#101](https://github.com/iojs/readable-stream/issues/101)
* simpler stream creation [#102](https://github.com/iojs/readable-stream/issues/102)
* proposal: deprecate implicit flowing of streams [#99](https://github.com/iojs/readable-stream/issues/99)

## Minutes

### adopt a charter

* group: +1's all around

### What versioning scheme should be adopted?
* group: +1’s 3.0.0
* domenic+group: pulling in patches from other sources where appropriate
* mikeal: version independently, suggesting versions for io.js
* mikeal+domenic: work with TC to notify in advance of changes
simpler stream creation

### streamline creation of streams
* sam: streamline creation of streams
* domenic: nice simple solution posted
  but, we lose the opportunity to change the model
  may not be backwards incompatible (double check keys)

  **action item:** domenic will check

### remove implicit flowing of streams on(‘data’)
* add isFlowing / isPaused
* mikeal: worrying that we’re documenting polyfill methods – confuses users
* domenic: more reflective API is probably good, with warning labels for users
* new section for mad scientists (reflective stream access)
* calvin: name the “third state”
* mikeal: maybe borrow the name from whatwg?
* domenic: we’re missing the “third state”
* consensus: kind of difficult to name the third state
* mikeal: figure out differences in states / compat
* mathias: always flow on data – eliminates third state
  * explore what it breaks

**action items:**
* ask isaac for ability to list packages by what public io.js APIs they use (esp. Stream)
* ask rod/build for infrastructure
* **chris**: explore the “flow on data” approach
* add isPaused/isFlowing
* add new docs section
* move isPaused to that section


1

node_modules/are-we-there-yet/node_modules/readable-stream/duplex-browser.js

generated

vendored

Normal file

@@ -0,0 +1 @@

module.exports = require('./lib/_stream_duplex.js');

1

node_modules/are-we-there-yet/node_modules/readable-stream/duplex.js

generated

vendored

Normal file

@@ -0,0 +1 @@

module.exports = require('./readable').Duplex

131

node_modules/are-we-there-yet/node_modules/readable-stream/lib/_stream_duplex.js

generated

vendored

Normal file

@@ -0,0 +1,131 @@

// Copyright Joyent, Inc. and other Node contributors.
//
// Permission is hereby granted, free of charge, to any person obtaining a
// copy of this software and associated documentation files (the
// "Software"), to deal in the Software without restriction, including
// without limitation the rights to use, copy, modify, merge, publish,
// distribute, sublicense, and/or sell copies of the Software, and to permit
// persons to whom the Software is furnished to do so, subject to the
// following conditions:
//
// The above copyright notice and this permission notice shall be included
// in all copies or substantial portions of the Software.
//
// THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS
// OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF
// MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN
// NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM,
// DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR
// OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE
// USE OR OTHER DEALINGS IN THE SOFTWARE.

// a duplex stream is just a stream that is both readable and writable.
// Since JS doesn't have multiple prototypal inheritance, this class
// prototypally inherits from Readable, and then parasitically from
// Writable.

'use strict';

/*<replacement>*/

var pna = require('process-nextick-args');
/*</replacement>*/

/*<replacement>*/
var objectKeys = Object.keys || function (obj) {
  var keys = [];
  for (var key in obj) {
    keys.push(key);
  }return keys;
};
/*</replacement>*/

module.exports = Duplex;

/*<replacement>*/
var util = Object.create(require('core-util-is'));
util.inherits = require('inherits');
/*</replacement>*/

var Readable = require('./_stream_readable');
var Writable = require('./_stream_writable');

util.inherits(Duplex, Readable);

{
  // avoid scope creep, the keys array can then be collected
  var keys = objectKeys(Writable.prototype);
  for (var v = 0; v < keys.length; v++) {
    var method = keys[v];
    if (!Duplex.prototype[method]) Duplex.prototype[method] = Writable.prototype[method];
  }
}

function Duplex(options) {
  if (!(this instanceof Duplex)) return new Duplex(options);

  Readable.call(this, options);
  Writable.call(this, options);

  if (options && options.readable === false) this.readable = false;

  if (options && options.writable === false) this.writable = false;

  this.allowHalfOpen = true;
  if (options && options.allowHalfOpen === false) this.allowHalfOpen = false;

  this.once('end', onend);
}

Object.defineProperty(Duplex.prototype, 'writableHighWaterMark', {
  // making it explicit this property is not enumerable
  // because otherwise some prototype manipulation in
  // userland will fail
  enumerable: false,
  get: function () {
    return this._writableState.highWaterMark;
  }
});

// the no-half-open enforcer
function onend() {
  // if we allow half-open state, or if the writable side ended,
  // then we're ok.
  if (this.allowHalfOpen || this._writableState.ended) return;

  // no more data can be written.
  // But allow more writes to happen in this tick.
  pna.nextTick(onEndNT, this);
}

function onEndNT(self) {
  self.end();
}

Object.defineProperty(Duplex.prototype, 'destroyed', {
  get: function () {
    if (this._readableState === undefined || this._writableState === undefined) {
      return false;
    }
    return this._readableState.destroyed && this._writableState.destroyed;
  },
  set: function (value) {
    // we ignore the value if the stream
    // has not been initialized yet
    if (this._readableState === undefined || this._writableState === undefined) {
      return;
    }

    // backward compatibility, the user is explicitly
    // managing destroyed
    this._readableState.destroyed = value;
    this._writableState.destroyed = value;
  }
});

Duplex.prototype._destroy = function (err, cb) {
  this.push(null);
  this.end();

  pna.nextTick(cb, err);
};
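
The header comment of `_stream_duplex.js` describes inheriting prototypally from one parent and "parasitically" from a second. The sketch below illustrates that pattern in isolation, assuming two hypothetical stand-in constructors (`Reader` and `Writer`) rather than the real `Readable` and `Writable` classes; it is an illustration of the technique, not a copy of the file's logic.

```javascript
'use strict';

// Hypothetical stand-ins for the two parents; not the real stream classes.
function Reader() { this.readCount = 0; }
Reader.prototype.read = function () { this.readCount += 1; return 'data'; };
Reader.prototype.describe = function () { return 'reader'; };

function Writer() { this.written = []; }
Writer.prototype.write = function (chunk) { this.written.push(chunk); return true; };
Writer.prototype.describe = function () { return 'writer'; };

// Run both parent constructors so instances carry both kinds of state.
function Both() {
  Reader.call(this);
  Writer.call(this);
}

// Prototypal inheritance from the first parent.
Both.prototype = Object.create(Reader.prototype, {
  constructor: { value: Both, writable: true, configurable: true }
});

// "Parasitic" inheritance from the second parent: copy each method across
// unless the prototype chain already provides one with the same name.
Object.keys(Writer.prototype).forEach(function (method) {
  if (!Both.prototype[method]) Both.prototype[method] = Writer.prototype[method];
});

const both = new Both();
both.write('x');
console.log(both instanceof Reader, both instanceof Writer, both.describe());
```

Because the second parent's methods are copied rather than linked, `instanceof` only recognises the first parent, and a name defined by both parents resolves to the first parent's version.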

47

node_modules/are-we-there-yet/node_modules/readable-stream/lib/_stream_passthrough.js

generated

vendored

Normal file

@@ -0,0 +1,47 @@

// Copyright Joyent, Inc. and other Node contributors.
//
// Permission is hereby granted, free of charge, to any person obtaining a
// copy of this software and associated documentation files (the
// "Software"), to deal in the Software without restriction, including
// without limitation the rights to use, copy, modify, merge, publish,
// distribute, sublicense, and/or sell copies of the Software, and to permit
// persons to whom the Software is furnished to do so, subject to the
// following conditions:
//
// The above copyright notice and this permission notice shall be included
// in all copies or substantial portions of the Software.
//
// THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS
// OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF
// MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN
// NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM,
// DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR
// OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE
// USE OR OTHER DEALINGS IN THE SOFTWARE.

// a passthrough stream.
// basically just the most minimal sort of Transform stream.
// Every written chunk gets output as-is.

'use strict';

module.exports = PassThrough;

var Transform = require('./_stream_transform');

/*<replacement>*/
var util = Object.create(require('core-util-is'));
util.inherits = require('inherits');
/*</replacement>*/

util.inherits(PassThrough, Transform);

function PassThrough(options) {
  if (!(this instanceof PassThrough)) return new PassThrough(options);

  Transform.call(this, options);
}

PassThrough.prototype._transform = function (chunk, encoding, cb) {
  cb(null, chunk);
};
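
Since `PassThrough` is described above as the most minimal `Transform`, the same behaviour can be sketched without a subclass by passing a `transform` function in the constructor options. The `createIdentity` helper is a hypothetical name, and the core `stream` module is used here only so the sketch runs without installing the vendored package.

```javascript
'use strict';

// Core `stream` is an assumption made for runnability; `readable-stream`
// exports the same Transform and PassThrough classes.
const { Transform, PassThrough } = require('stream');

// Hypothetical helper: an identity transform built from constructor options.
function createIdentity() {
  return new Transform({
    transform(chunk, encoding, cb) {
      cb(null, chunk); // hand the chunk to the readable side unchanged
    }
  });
}

const identity = createIdentity();
identity.write('hello');
const echoed = identity.read().toString();

// The built-in PassThrough should behave the same way for the same input.
const builtIn = new PassThrough();
builtIn.write('hello');
const echoedByBuiltIn = builtIn.read().toString();

console.log(echoed === echoedByBuiltIn);
```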

1019

node_modules/are-we-there-yet/node_modules/readable-stream/lib/_stream_readable.js

generated

vendored

Normal file

File diff suppressed because it is too large

214

node_modules/are-we-there-yet/node_modules/readable-stream/lib/_stream_transform.js

generated

vendored

Normal file

@@ -0,0 +1,214 @@

// Copyright Joyent, Inc. and other Node contributors.
//
// Permission is hereby granted, free of charge, to any person obtaining a
// copy of this software and associated documentation files (the
// "Software"), to deal in the Software without restriction, including
// without limitation the rights to use, copy, modify, merge, publish,
// distribute, sublicense, and/or sell copies of the Software, and to permit
// persons to whom the Software is furnished to do so, subject to the
// following conditions:
//
// The above copyright notice and this permission notice shall be included
// in all copies or substantial portions of the Software.
//
// THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS
// OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF
// MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN
// NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM,
// DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR
// OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE
// USE OR OTHER DEALINGS IN THE SOFTWARE.

// a transform stream is a readable/writable stream where you do
// something with the data. Sometimes it's called a "filter",
// but that's not a great name for it, since that implies a thing where
// some bits pass through, and others are simply ignored. (That would
// be a valid example of a transform, of course.)
//
// While the output is causally related to the input, it's not a
// necessarily symmetric or synchronous transformation. For example,
// a zlib stream might take multiple plain-text writes(), and then
// emit a single compressed chunk some time in the future.
//
// Here's how this works:
//
// The Transform stream has all the aspects of the readable and writable
// stream classes. When you write(chunk), that calls _write(chunk,cb)
// internally, and returns false if there's a lot of pending writes
// buffered up. When you call read(), that calls _read(n) until
// there's enough pending readable data buffered up.
//
// In a transform stream, the written data is placed in a buffer. When
// _read(n) is called, it transforms the queued up data, calling the
// buffered _write cb's as it consumes chunks. If consuming a single
// written chunk would result in multiple output chunks, then the first
// outputted bit calls the readcb, and subsequent chunks just go into
// the read buffer, and will cause it to emit 'readable' if necessary.
//
// This way, back-pressure is actually determined by the reading side,
// since _read has to be called to start processing a new chunk. However,
// a pathological inflate type of transform can cause excessive buffering
// here. For example, imagine a stream where every byte of input is
// interpreted as an integer from 0-255, and then results in that many
// bytes of output. Writing the 4 bytes {ff,ff,ff,ff} would result in
// 1kb of data being output. In this case, you could write a very small
// amount of input, and end up with a very large amount of output. In
// such a pathological inflating mechanism, there'd be no way to tell
// the system to stop doing the transform. A single 4MB write could
// cause the system to run out of memory.
//
// However, even in such a pathological case, only a single written chunk
// would be consumed, and then the rest would wait (un-transformed) until
// the results of the previous transformed chunk were consumed.

'use strict';

module.exports = Transform;

var Duplex = require('./_stream_duplex');

/*<replacement>*/
var util = Object.create(require('core-util-is'));
util.inherits = require('inherits');
/*</replacement>*/

util.inherits(Transform, Duplex);

function afterTransform(er, data) {
  var ts = this._transformState;
  ts.transforming = false;

  var cb = ts.writecb;

  if (!cb) {
    return this.emit('error', new Error('write callback called multiple times'));
  }

  ts.writechunk = null;
  ts.writecb = null;

  if (data != null) // single equals check for both `null` and `undefined`
    this.push(data);

  cb(er);

  var rs = this._readableState;
  rs.reading = false;
  if (rs.needReadable || rs.length < rs.highWaterMark) {
    this._read(rs.highWaterMark);
  }
}

function Transform(options) {
  if (!(this instanceof Transform)) return new Transform(options);

  Duplex.call(this, options);

  this._transformState = {
    afterTransform: afterTransform.bind(this),
    needTransform: false,
    transforming: false,
    writecb: null,
    writechunk: null,
    writeencoding: null
  };

  // start out asking for a readable event once data is transformed.
  this._readableState.needReadable = true;

  // we have implemented the _read method, and done the other things
  // that Readable wants before the first _read call, so unset the
  // sync guard flag.
  this._readableState.sync = false;

  if (options) {
    if (typeof options.transform === 'function') this._transform = options.transform;

    if (typeof options.flush === 'function') this._flush = options.flush;
  }

  // When the writable side finishes, then flush out anything remaining.
  this.on('prefinish', prefinish);
}

function prefinish() {
  var _this = this;

  if (typeof this._flush === 'function') {
    this._flush(function (er, data) {
      done(_this, er, data);
    });
  } else {
    done(this, null, null);
  }
}

Transform.prototype.push = function (chunk, encoding) {
  this._transformState.needTransform = false;
  return Duplex.prototype.push.call(this, chunk, encoding);
};

// This is the part where you do stuff!
// override this function in implementation classes.
// 'chunk' is an input chunk.
//
// Call `push(newChunk)` to pass along transformed output
// to the readable side. You may call 'push' zero or more times.
//
// Call `cb(err)` when you are done with this chunk. If you pass
// an error, then that'll put the hurt on the whole operation. If you
// never call cb(), then you'll never get another chunk.
Transform.prototype._transform = function (chunk, encoding, cb) {
  throw new Error('_transform() is not implemented');
};

Transform.prototype._write = function (chunk, encoding, cb) {
  var ts = this._transformState;
  ts.writecb = cb;
  ts.writechunk = chunk;
  ts.writeencoding = encoding;
  if (!ts.transforming) {
    var rs = this._readableState;
    if (ts.needTransform || rs.needReadable || rs.length < rs.highWaterMark) this._read(rs.highWaterMark);
  }
};

// Doesn't matter what the args are here.
// _transform does all the work.
// That we got here means that the readable side wants more data.
Transform.prototype._read = function (n) {
  var ts = this._transformState;

  if (ts.writechunk !== null && ts.writecb && !ts.transforming) {
    ts.transforming = true;
    this._transform(ts.writechunk, ts.writeencoding, ts.afterTransform);
  } else {
    // mark that we need a transform, so that any data that comes in
    // will get processed, now that we've asked for it.
    ts.needTransform = true;
  }
};

Transform.prototype._destroy = function (err, cb) {
  var _this2 = this;

  Duplex.prototype._destroy.call(this, err, function (err2) {
    cb(err2);
    _this2.emit('close');
  });
};

function done(stream, er, data) {
  if (er) return stream.emit('error', er);

  if (data != null) // single equals check for both `null` and `undefined`
    stream.push(data);

  // if there's nothing in the write buffer, then that means
  // that nothing more will ever be provided
  if (stream._writableState.length) throw new Error('Calling transform done when ws.length != 0');

  if (stream._transformState.transforming) throw new Error('Calling transform done when still transforming');

  return stream.push(null);
}
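
The header comment of `_stream_transform.js` imagines a "pathological inflate" transform in which each input byte, read as an integer from 0 to 255, produces that many bytes of output. The following is a rough sketch of such a transform; `createInflate` and the `.` fill byte are hypothetical choices for illustration, and the core `stream` module is assumed only so the sketch runs without the vendored package.

```javascript
'use strict';

// Core `stream` is an assumption made for runnability; `readable-stream`
// exports the same Transform class.
const { Transform } = require('stream');

// Hypothetical inflating transform: an input byte with value b yields
// b bytes of output, so a tiny write can produce a much larger read.
function createInflate() {
  return new Transform({
    transform(chunk, encoding, cb) {
      let total = 0;
      for (const byte of chunk) total += byte; // sum of all byte values
      cb(null, Buffer.alloc(total, 0x2e)); // emit `total` bytes of '.'
    }
  });
}

const inflate = createInflate();
inflate.write(Buffer.from([0xff, 0xff, 0xff, 0xff]));

// 4 input bytes of 0xff expand to 4 * 255 output bytes.
const inflated = inflate.read();
console.log(inflated.length);
```

Four input bytes become 1020 output bytes here, which the header comment rounds to 1kb; the ratio between input and output size is what makes this kind of transform hard to throttle from the writing side.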

687

node_modules/are-we-there-yet/node_modules/readable-stream/lib/_stream_writable.js

generated

vendored

Normal file

@@ -0,0 +1,687 @@

// Copyright Joyent, Inc. and other Node contributors.
//
// Permission is hereby granted, free of charge, to any person obtaining a
// copy of this software and associated documentation files (the
// "Software"), to deal in the Software without restriction, including
// without limitation the rights to use, copy, modify, merge, publish,
// distribute, sublicense, and/or sell copies of the Software, and to permit

|